Writing quality test items often takes considerable time, primarily because traditional item-writing strategies require extensive effort from subject-matter experts (SMEs). A commonly used way to speed up the process without compromising item quality is automated item generation (AIG), a process in which item writers develop templates, or cognitive models, from which complete items are then created.

While the item template approach to AIG has proven useful for generating knowledge-based items with clear correct answers, it offers only as much variability as its templates allow. For item types that require variability and natural-sounding language, such as personality inventory items or situational judgment tests (SJTs), a more flexible approach is needed.
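To make that limitation concrete, here is a minimal Python sketch of template-based generation, assuming a single hypothetical arithmetic item model. The template text, slot names, and the `generate_items` and `speed_key` helpers are illustrative only. Every item it produces shares the same wording; only the slot values change.

```python
from itertools import product

# Hypothetical item model: a fixed stem with two slots and the values each may take.
TEMPLATE = ("A train travels {distance} miles in {hours} hours. "
            "What is its average speed in miles per hour?")
SLOTS = {"distance": [120, 180, 240], "hours": [2, 3, 4]}


def speed_key(values):
    """Derive the correct answer from the slot values."""
    return values["distance"] / values["hours"]


def generate_items(template, slots, answer_fn):
    """Fill the template with every combination of slot values."""
    names = list(slots)
    items = []
    for combo in product(*(slots[name] for name in names)):
        values = dict(zip(names, combo))
        items.append((template.format(**values), answer_fn(values)))
    return items


items = generate_items(TEMPLATE, SLOTS, speed_key)
print(len(items))  # 9
print(items[0])
# ('A train travels 120 miles in 2 hours. What is its average speed in miles per hour?', 60.0)
```

Three distances times three durations yields nine items with identical phrasing, which is why this approach suits knowledge-based items better than personality or SJT items.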

At HumRRO, we have successfully used natural language processing (NLP) to generate test items for a variety of assessment types. Building on this expertise, we have developed an innovative interface for on-demand automated generation of test items using fine-tuned natural language understanding and generation (NLU/NLG) models. The NLU side of NLP allows computers to capture the nuance inherent in human language, and NLG models build on that understanding to write natural-sounding text (in this case, varied test items) that reflects such nuance.

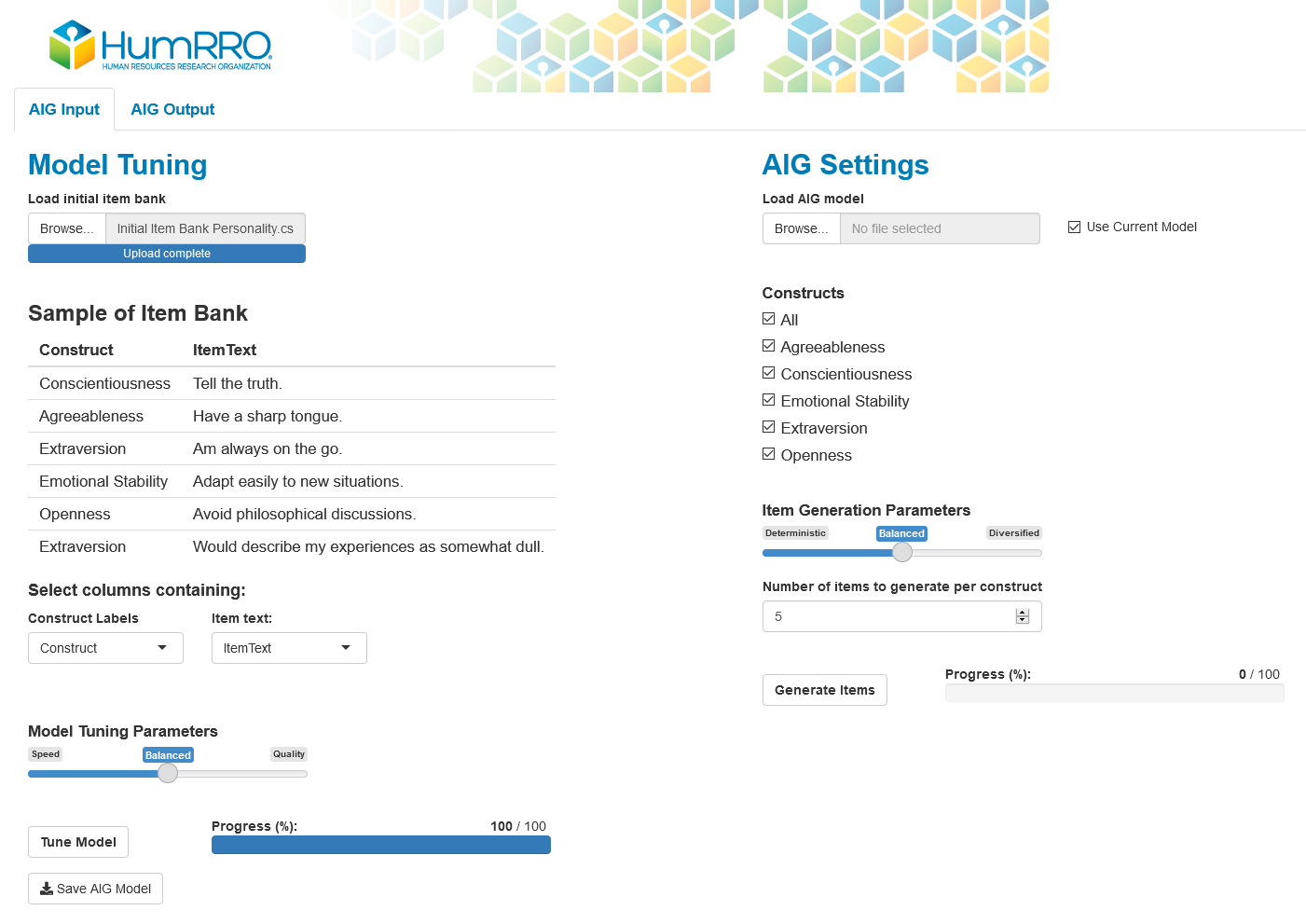

Our interface is user-friendly and designed for item and test developers with no prior experience in machine learning or natural language processing. It fine-tunes a language model on human-written test items, automatically generates new items from this model, and programmatically evaluates the quality of the generated items.
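The three stages (learn from human-written items, generate, evaluate) can be sketched end to end in Python. This is a deliberately tiny stand-in, not the interface's implementation: instead of fine-tuning a pretrained transformer, it trains a word-level bigram model from scratch on four hypothetical personality items, and the screens in the hypothetical `passes_checks` function are assumptions chosen for illustration.

```python
import random
from collections import defaultdict

START, END = "<s>", "</s>"


def train_bigram(items):
    """Count word-to-next-word transitions in human-written items."""
    counts = defaultdict(lambda: defaultdict(int))
    for item in items:
        words = [START] + item.split() + [END]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, rng, max_words=20):
    """Sample one item word by word, in proportion to observed transition counts."""
    words, prev = [], START
    while len(words) < max_words:
        candidates = counts[prev]
        nxt = rng.choices(list(candidates), weights=list(candidates.values()))[0]
        if nxt == END:
            break
        words.append(nxt)
        prev = nxt
    return " ".join(words)


def passes_checks(item, training_items, min_words=4):
    """Illustrative screens: long enough, ends like a sentence, not a verbatim training item."""
    return (len(item.split()) >= min_words
            and item.endswith(".")
            and item not in training_items)


human_items = [
    "I enjoy working with other people.",
    "I prefer working alone on new tasks.",
    "I stay calm when working with difficult people.",
    "I enjoy new tasks.",
]
rng = random.Random(0)  # fixed seed so the run is reproducible
model = train_bigram(human_items)
drafts = {generate(model, rng) for _ in range(50)}
kept = sorted(d for d in drafts if passes_checks(d, human_items))
print(kept)
```

Because each draft is screened against the training items, `kept` holds only recombinations of the human-written material, never verbatim copies. A production system would replace each stage with something far stronger, but the train, generate, evaluate shape stays the same.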

In our experience, natural language generation techniques hold great promise for increasing flexibility in item development. NLU/NLG models comprehend and contextualize words and then predict the most plausible word or words to follow them, and the resulting advances in text generation are compelling and exciting.
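The "predict the most plausible next word" step comes down to converting the model's raw scores into probabilities and choosing among them. Below is a minimal sketch, assuming made-up scores for a hypothetical SJT stem; a real model scores its entire vocabulary, not four words.

```python
import math


def softmax(scores):
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}


# Hypothetical scores for candidate next words after the context
# "When a coworker misses a deadline, I usually ..."
logits = {"discuss": 3.1, "ignore": 1.2, "report": 2.0, "banana": -4.0}
probs = softmax(logits)
next_word = max(probs, key=probs.get)
print(next_word)  # discuss
```

Always taking the highest-probability word is greedy decoding; the options for adding randomness to item generation relax exactly this choice.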

Although the application of NLU/NLG to AIG remains in its infancy, the use of these models has surged in other areas, and models trained on very large text collections have developed a rich representation of the English language. As a result, a significant proportion of generated text is comparable in coherence to human-written text and requires little to no additional editing.

Generating Advantages

At HumRRO, we have taken advantage of NLU/NLG advances across several assessment formats, including SJTs, personality tests, and interest inventories. We’ve found that this approach generates high-quality content across all three item types.

Our approach to AIG is agnostic to the specific test content and can theoretically be applied to generate new test content for any testing program. Our experience indicates that NLU/NLG can offer huge advantages over more traditional approaches to AIG:

- Cutting the time-consuming upfront analysis of item components and the schema development otherwise needed to produce new items.

- Reducing reliance on human item writers, with downstream benefits of reducing costs and reallocating personnel toward more complex and less automatable tasks, such as item review.

- Lessening the impact of the biases or predispositions that individual writers' styles introduce into items.

- Integrating content that human item writers may not have considered.

- Increasing the quantity of items developed while preserving item quality, with larger item banks providing additional benefits for other aspects of test development such as form assembly and test security.

In addition to the overall benefits of using NLU/NLG for automated item generation, we also tailor our AIG models to the needs of specific test or item development programs. Our customization options let users tune parameters in the model development and item generation procedures, including choosing between:

- Speed or quality in the model development procedure. Underlying these options are parameters that affect the model's learning rate. Actual training time depends on the content fed into the program, but a quality model can take a few hours longer to develop than a speedy one. A higher-quality model is worth the time investment for operational use, whereas a speedier model may be more reasonable when, for example, experimenting with different items.

- Deterministic or varied items in the item generation procedure. Underlying these options are parameters that control the amount of randomness in item generation. More randomness yields a greater variety of item content, at the cost of a higher chance of errors such as nonsensical items. Text-cleaning steps applied after generation can mitigate some of this error while preserving variation.

- Construct-specific or construct-agnostic item generation. The AIG model can be tuned to learn features that characterize different constructs, such as items measuring different personality traits or items that reflect different proficiency levels. In the model development procedure, users can specify whether to train the model to learn construct labels; if constructs are specified, users can then choose which constructs to generate items for in the item generation procedure.
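The three generation options can be sketched in Python under stated assumptions. `sample_with_temperature` uses temperature scaling, one common way to control randomness (the interface's actual parameters may differ); `with_construct_label` uses a hypothetical bracketed tag format for construct labels; and `clean` applies illustrative post-generation filters. All three names are hypothetical.

```python
import math
import random


def sample_with_temperature(logits, temperature, rng):
    """Pick the next word; temperature 0 means greedy (deterministic) decoding."""
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        return max(logits, key=logits.get)
    scaled = {word: s / temperature for word, s in logits.items()}
    peak = max(scaled.values())
    weights = [math.exp(s - peak) for s in scaled.values()]  # higher temperature flattens these
    return rng.choices(list(scaled), weights=weights)[0]


def with_construct_label(item, construct=None):
    """Prefix a construct tag so the model can learn, and later be prompted with, the label."""
    return f"[{construct}] {item}" if construct else item


def clean(items):
    """Post-generation cleanup: normalize whitespace, drop fragments and duplicates."""
    seen, kept = set(), []
    for item in items:
        text = " ".join(item.split())
        if len(text.split()) >= 4 and text.endswith(".") and text.lower() not in seen:
            seen.add(text.lower())
            kept.append(text)
    return kept


logits = {"discuss": 3.1, "report": 2.0, "ignore": 1.2}
rng = random.Random(42)
greedy = {sample_with_temperature(logits, 0, rng) for _ in range(20)}
varied = {sample_with_temperature(logits, 1.5, rng) for _ in range(200)}
print(greedy)  # {'discuss'}
print(with_construct_label("I enjoy new tasks.", "Extraversion"))
# [Extraversion] I enjoy new tasks.
print(clean(["  I enjoy  new tasks. ", "i enjoy new tasks.", "I enjoy"]))
# ['I enjoy new tasks.']
```

Greedy decoding returns the same word every time, while a higher temperature spreads choices across candidates; the cleaning pass then removes fragments and near-duplicates that the extra randomness tends to produce.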

To further tailor the interface to user preferences, these options can be presented at different levels of complexity. For example, when adjusting the speed/quality tradeoff in model development, one user may prefer simplicity, so we provide a simple slider between speed and quality that adjusts the underlying parameters in the background. Another user may prefer greater control, so we also provide a version in which the user can enter values for specific model development parameters.
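A slider of this kind is essentially a mapping from one position to several parameters. The sketch below assumes the underlying parameters are a learning rate and a number of training epochs, with hypothetical endpoint values; `TrainingConfig`, `config_from_slider`, and `config_from_expert` are illustrative names, and the advanced view simply exposes the same fields directly.

```python
import math
from dataclasses import dataclass


@dataclass
class TrainingConfig:
    learning_rate: float
    num_epochs: int


# Hypothetical endpoints; real values would be chosen per testing program.
FAST = TrainingConfig(learning_rate=5e-4, num_epochs=1)
THOROUGH = TrainingConfig(learning_rate=5e-5, num_epochs=5)


def config_from_slider(position: float) -> TrainingConfig:
    """Simple view: map a 0.0 (speed) to 1.0 (quality) slider onto training parameters."""
    if not 0.0 <= position <= 1.0:
        raise ValueError("slider position must be between 0 and 1")
    # Interpolate the learning rate on a log scale, since its useful range spans orders of magnitude.
    log_lr = ((1 - position) * math.log10(FAST.learning_rate)
              + position * math.log10(THOROUGH.learning_rate))
    epochs = round(FAST.num_epochs + position * (THOROUGH.num_epochs - FAST.num_epochs))
    return TrainingConfig(learning_rate=10 ** log_lr, num_epochs=epochs)


def config_from_expert(learning_rate: float, num_epochs: int) -> TrainingConfig:
    """Advanced view: the user sets each parameter directly."""
    return TrainingConfig(learning_rate=learning_rate, num_epochs=num_epochs)


print(config_from_slider(0.5).num_epochs)  # 3
```

Log-scale interpolation puts the slider's midpoint at the geometric mean of the two learning rates rather than at a value dominated by the larger endpoint.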

Ultimately, our goal is to make NLU/NLG-based AIG, and the efficiency gains it brings, accessible to everyone. If you are interested in learning more, please contact us for a demonstration.